Theory and Specification of a Digital Video

Content of the lesson:

- Frame Frequency

- Interlacing an Image

- Video Resolution

- Aspect Ratio

- Data Flow

- Formats (audio and video) /most used/

- Codecs

- The Difference between Formats and Codecs

- Multimedia Containers /most used/

Frame Frequency

Frame frequency (frame rate) is the frequency at which an imaging device displays consecutive unique images, or at which a recording device captures them. It is usually expressed in fps (frames per second) or simply in hertz. In both cases the unit corresponds to one image per second.

Frame rates in film and television

In film, television and video processing, several frame frequencies are commonly used: 24 Hz, 25 Hz, 30 Hz, 50 Hz and 60 Hz.

- 24 Hz is the frequency mostly used in the film industry. During projection each image is shown twice, so the display frequency is doubled.

- The PAL television format has 50 half-images per second (50 Hz), which means 25 full images per second.

- The NTSC television format has 59.94 half-images per second (precisely 60 Hz/1.001).
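The relationships between these rates can be checked with a few lines of Python. This is only a minimal sketch: the constant and function names are illustrative assumptions, and exact fractions are used so that the NTSC value is not rounded prematurely.

```python
from fractions import Fraction

# Rates quoted in the list above (values in Hz).
FILM_FPS = Fraction(24)
PAL_FIELD_RATE = Fraction(50)
NTSC_FIELD_RATE = Fraction(60) / Fraction(1001, 1000)  # 60 Hz / 1.001


def frame_rate_from_fields(field_rate):
    """Two half-images (fields) make up one full image."""
    return field_rate / 2


def frame_interval_ms(fps):
    """Time between two consecutive images, in milliseconds."""
    return float(1000 / fps)


pal_fps = frame_rate_from_fields(PAL_FIELD_RATE)
ntsc_fps = frame_rate_from_fields(NTSC_FIELD_RATE)
film_projection_rate = FILM_FPS * 2  # each film image is shown twice

print(float(pal_fps), round(float(ntsc_fps), 3), round(float(NTSC_FIELD_RATE), 2))
print(frame_interval_ms(pal_fps), float(film_projection_rate))
```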

source: Wikipedie otevřená encyklopedie

Interlacing an image

When we process video shot by a digital camcorder bought in Europe, the recording will most likely follow the PAL television standard. It is characterized by a frame rate of 25 fps and an image resolution of 720 x 576 pixels, regardless of whether we shoot in the standard (4:3) or widescreen (16:9) aspect ratio. The 25 frames per second consist of 50 half-images (50i, "i" for "interlaced"). Each half-image contains only the odd or only the even lines of the image. At first sight this might seem equivalent to 25 full images (25p, "p" for "progressive"), but it is not. If we recorded the video as 25 full images, then after pausing the video we would see objects frozen in one position (how sharply depends, of course, on the quality of the camcorder), with consecutive images 0.04 second (1/25) apart (see picture no. 1). Because the recording is shot in half-images 0.02 second (1/50) apart, and the odd and even lines are drawn together, a single frame shows an interlaced image whose two halves were not shot at the same moment (see picture no. 2).

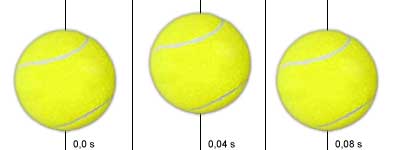

Three snapshots of a ball in flight recorded in 25p

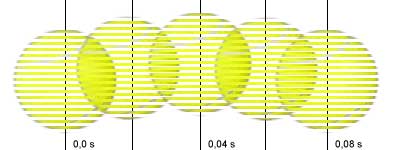

Snapshots recorded and displayed in 50i
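The way two half-images from different moments end up in one displayed frame can be sketched as follows. The frame is modelled simply as a list of scan lines, an even number of lines is assumed, and the function names are illustrative, not taken from any video library.

```python
def split_fields(frame):
    """Split a frame (a list of scan lines) into its two half-images.

    Lines are counted from 1, so list index 0 holds the first odd line.
    """
    odd_field = frame[0::2]
    even_field = frame[1::2]
    return odd_field, even_field


def weave(odd_field, even_field):
    """Interleave an odd and an even field back into one full frame."""
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.extend([odd_line, even_line])
    return frame


# Two moments 1/50 s apart; every line is labelled with its capture time.
scene_t0 = ["t0"] * 6
scene_t1 = ["t1"] * 6

odd_from_t0, _ = split_fields(scene_t0)
_, even_from_t1 = split_fields(scene_t1)

# The displayed 50i frame mixes lines captured at two different moments.
interlaced_frame = weave(odd_from_t0, even_from_t1)
print(interlaced_frame)
```

In a 25p recording all six lines would carry the same time label; the alternating labels are what appears as the comb-like edges on moving objects.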

source: Michal Hrabí: Filmové efekty II - 3. díl: Jak na export videa nejen v Adobe Premiere

Video resolution

NTSC and PAL

NTSC (National Television System Committee) is the predominant analog video standard in North America and Japan, while in Europe it is PAL (Phase Alternation by Line). Both standards come from the television industry. NTSC has a resolution of 480 horizontal scan lines and a frequency of 30 images per second. PAL has a higher resolution of 576 horizontal scan lines but a lower number of images per second (25). The total amount of information per second is therefore the same for both standards.
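The claim about equal information per second can be verified by multiplying the number of scan lines by the number of images per second. A minimal sketch, using the nominal 30 and 25 images per second from the text (the function name is an illustrative assumption):

```python
def scan_lines_per_second(visible_lines, images_per_second):
    """Number of scan lines drawn in one second."""
    return visible_lines * images_per_second


ntsc_lines = scan_lines_per_second(480, 30)
pal_lines = scan_lines_per_second(576, 25)

print(ntsc_lines, pal_lines)
```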

Various NTSC resolutions

Various PAL resolutions

VGA Resolution

VGA (Video Graphics Array) is a graphics display system first introduced by IBM for the PC. Its resolution is defined as 640x480 pixels, which is very close to NTSC and PAL. Under normal conditions VGA is a more suitable format for network cameras, because their footage is mostly viewed on PC monitors that use the VGA resolution (or its multiples). Quarter VGA (QVGA), with a resolution of 320x240, is also a frequently used format. Other resolutions derived from VGA include XVGA (1024x768 pixels) and 1280x960 pixels, which has four times the area of VGA and offers megapixel resolution.

MPEG Resolution

Resolution is usually:

- 704x480 pixels (TV NTSC)

- 704x576 pixels (TV PAL)

- 720x480 pixels (DVD-Video NTSC)

- 720x576 pixels (DVD-Video PAL)

source: Netcam.cz

Aspect ratio

The aspect ratio is usually given as a fraction (4:3, 16:9) or as a number (1.33, 1.78, 2.35, etc.). It is the real aspect ratio of the active image (without black stripes), and it affects playback.

PAR (Pixel Aspect Ratio) - the aspect ratio of a single pixel. It states how many times a video that uses non-square pixels should be stretched horizontally. For example, for a 4:3 DVD the PAR is 1.0926 and for a 16:9 DVD it is 1.4568.
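As a rough illustration, the stretched width in square pixels is the stored width multiplied by the PAR. The sketch below assumes that the two PAR values above refer to the 720x576 DVD-Video PAL frame; the function name is hypothetical.

```python
def display_width(stored_width, par):
    """Width in square pixels after stretching non-square pixels by PAR."""
    return round(stored_width * par)


STORED_WIDTH, STORED_HEIGHT = 720, 576  # assumed: DVD-Video PAL frame

width_4_3 = display_width(STORED_WIDTH, 1.0926)
width_16_9 = display_width(STORED_WIDTH, 1.4568)

print(width_4_3, STORED_HEIGHT)
print(width_16_9, STORED_HEIGHT)
```

The results are slightly wider than the 768 and 1024 pixels that exact 4:3 and 16:9 would give at 576 lines; a common explanation is that these PAR values treat only part of the 720 stored pixels as active picture.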

DAR (Display Aspect Ratio) - the aspect ratio of the displayed image. It is a piece of information in the video stream that states in which aspect ratio the video should be drawn. For DV and DVD the value can be 1:1, 4:3, 16:9 or 2.21:1; the first and last values are rarely used. DAR says nothing about the real aspect ratio (AR), because there can be black stripes within the image.

LB (Letterbox) - named after the slot of a letter box. It is a way of displaying a widescreen video on a standard 4:3 television (or vice versa). Black stripes are added to the image.

PS (Pan & Scan) - another way of displaying a 16:9 video on a 4:3 TV (or vice versa). The image is enlarged to fill the screen and the parts that do not fit are trimmed.
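The cost of each method can be estimated with simple arithmetic. The following sketch assumes a 4:3 screen of 768x576 square pixels showing a 16:9 video; the screen size and the function names are assumptions made for the example.

```python
from fractions import Fraction


def letterbox_bar_height(screen_w, screen_h, video_ar):
    """Height of each black stripe when the video is fitted to the screen width."""
    active_height = Fraction(screen_w) / video_ar
    return (screen_h - active_height) / 2


def pan_scan_loss(screen_ar, video_ar):
    """Share of the image width trimmed when the video fills the screen height."""
    return 1 - screen_ar / video_ar


bar = letterbox_bar_height(768, 576, Fraction(16, 9))
loss = pan_scan_loss(Fraction(4, 3), Fraction(16, 9))

print(bar)   # stripe height in lines, top and bottom
print(loss)  # fraction of the picture width that is cut off
```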

source: JeCh Webz

Data flow

Bitrate (data flow) states the number of bits per second that a player processes while playing a video. The bitrate of a file is the number of bits per second of image (and sound) used for encoding. Generally speaking, the more bits per second are used for compression, the better the quality of the video.

Subdivision

- CBR - constant bitrate, or constant data flow. The data flow stays the same (constant) throughout playback. It is simple to compress, but the same data flow is used even where it is not needed (a still image with no movement), which occupies disc space unnecessarily. The codec keeps the same rate no matter how much is really needed. If an audio recording is made at a constant rate, the midpoint of the composition lies exactly in the middle of the file.

- VBR - variable bitrate, or variable data flow. Here the compression ratio changes according to the complexity of the scene: where the image moves fast, the compression is lowest (and the data flow therefore highest), while less complex passages use less data. The benefit is that at the same average data flow we obtain a considerably higher image quality than with a constant data flow. The drawback is that we cannot calculate the size of the final file precisely (the selected bitrate is only a mean, and the codec rarely hits it exactly). As a rule, VBR makes it possible to record more data (a longer video) on one disc than CBR at the same picture quality. This type of encoding is used for MPEG-4.
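The relationship between bitrate, duration and file size can be sketched in a few lines. The example uses the data flow of 1.5 Mbit/s and the 72 minutes quoted for MPEG-1 later in this lesson; container overhead is ignored, the per-second VBR values are made up for illustration, and the function names are assumptions.

```python
def file_size_bytes(bitrate_bps, duration_s):
    """Approximate stream size: bits per second times seconds, divided by 8."""
    return bitrate_bps * duration_s / 8


def cbr_offset_bytes(bitrate_bps, position_s):
    """Byte offset of a playback position in a constant-bitrate stream."""
    return bitrate_bps * position_s / 8


def average_bitrate(bitrates_bps):
    """Mean bitrate of a VBR stream given one value per second."""
    return sum(bitrates_bps) / len(bitrates_bps)


total = file_size_bytes(1_500_000, 72 * 60)
halfway = cbr_offset_bytes(1_500_000, 36 * 60)

# Hypothetical VBR stream: calm seconds use little data, busy ones a lot.
vbr_seconds = [500_000, 500_000, 3_000_000, 2_000_000]

print(total, halfway, average_bitrate(vbr_seconds))
```

With CBR the halfway point in time is also the halfway point in the file, which is the property mentioned for audio recordings above. With VBR only the average is known in advance, so the same calculation gives an estimate rather than an exact size.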

source: Video na PC

Most Used Video Formats

MPEG

Moving Picture Experts Group (MPEG) is the name for a group of standards used for encoding and decoding images and sound by means of compression algorithms. The aim of this work was to standardize the methods of video signal compression. All MPEG compressions use the discrete cosine transform (DCT).

source 1: Vladimír Preclík: Videoformáty

source 2: Svět hardware

MPEG-1

MPEG-1 was used, and is still sometimes used, to record images and sound on Video CD discs. The MPEG-1 format was finished in 1991 and accepted as a standard in 1992. It was designed to work with images of 352x288 pixels at 25 frames/s (derived from PAL) or 352x240 pixels at 30 frames/s (derived from NTSC), with a data flow of 1.5 Mbit/s. These values were considered optimal, while the maximum values allowed by the format were not attainable in practice. With these parameters it corresponded to the VHS format, but in digital form for CD. The MPEG-1 format became part of the White Book, which defines the standard for recording video on CD (72 minutes of video). Nowadays MPEG-1 video is sometimes used for Internet presentations where its quality is sufficient for informative purposes. The MP3 audio format developed from MPEG-1 and became probably the best-known audio format. Thanks to portable MP3 players and the Internet it has become the most widespread compressed audio format used at home.

source 1: Vladimír Preclík: Videoformáty

source 2: Ondřej Beck: MPEG

MPEG-2

The MPEG-2 format has become a standard for the compression of digital video. Its biggest advantages are thorough technical documentation, general compatibility and wide adoption. The MPEG-2 format was finished in 1994 and is now the format most commonly used to compress video. It is used as the compression algorithm for DVD, where it can be encoded in two different ways: with a constant bitrate (CBR) or a variable bitrate (VBR).

source 1: Vladimír Preclík: Videoformáty

source 2: Ondřej Beck: MPEG

MPEG-4

The MPEG-4 standard was designed for extremely low data flows, below 64 kbit/s. A number of codecs connected with MPEG-4 have since appeared that make a digital video smaller with only a small change to the image. The best known is DivX, which is not officially certified. Others are Xvid and the WMV and WMA formats by Microsoft; WMV is also available in a WMV HD version for recording high-resolution video with 1080 lines. The QuickTime and RealVideo formats are also counted among MPEG-4; these are mainly used for downloading film trailers from the Internet. MPEG-4 is used not only on the Internet, where the smallest possible size is needed because of transfer speed, but also for video on CD. The MPEG-4 format works with three setting levels (low, middle and high), which make it possible to change the data flow and thereby reduce the size and optimize streams. It also works in two modes, VBR and CBR.

source 1: Vladimír Preclík: Videoformáty

source 2: Ondřej Beck: MPEG

WMV

Windows Media Video (WMV) is a video compression technology comprising several codecs developed by Microsoft. The original codec, known simply as WMV, was conceived as a competitor to the established RealVideo for Internet streaming applications. The other codecs, e.g. WMV Screen and WMV Image, deal with specialized content. Through standardization by SMPTE, WMV was also adopted for physical formats such as HD DVD and Blu-ray Disc.

source: Wikipedie otevřená encyklopedie

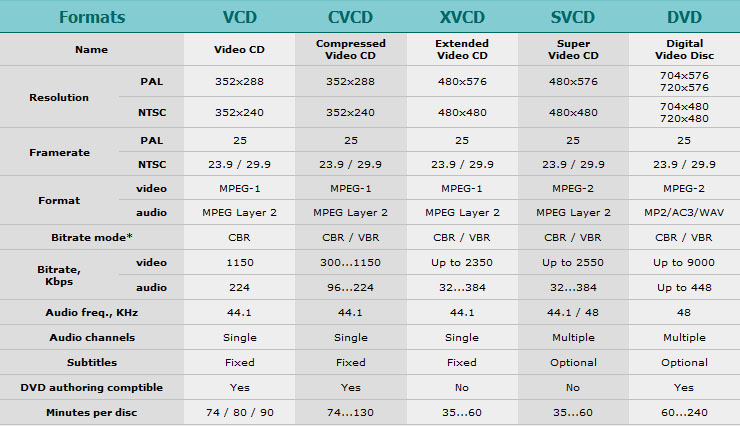

DVD Compatible Formats (source: divxland.org)

Most Used Audio Formats

MP3

MP3 (MPEG-1 Layer 3) is a lossy audio format based on an MPEG (Moving Picture Experts Group) compression algorithm. While retaining high quality, it reduces the size of music files in CD quality to about a tenth. For spoken word the results are considerably worse.
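To see what "about a tenth" means in terms of bitrate, the uncompressed data flow of CD audio can be computed first. The CD parameters below (44.1 kHz, 16 bits, two channels) are the standard audio CD values and are an assumption added here, since the text does not state them.

```python
# Assumed audio CD parameters; not stated in the text above.
CD_SAMPLE_RATE = 44_100  # samples per second per channel
CD_BIT_DEPTH = 16        # bits per sample
CD_CHANNELS = 2          # stereo

cd_bitrate = CD_SAMPLE_RATE * CD_BIT_DEPTH * CD_CHANNELS  # bits per second
one_tenth = cd_bitrate / 10

print(cd_bitrate, one_tenth)
```

One tenth of the CD data flow is roughly 141 kbit/s, which is close to the 128 kbit/s commonly chosen for MP3 files.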

source: Svět hardware

WMA

Windows Media Audio (WMA) is an audio format developed as part of Windows Media, originally introduced as a substitute for MP3 (which was patented, so Microsoft had to pay for its implementation in Windows). Today it competes with AAC, which is used by Apple. WMA files exclusively use the ASF container and have the suffix .asf or .wma.

source: Wikipedie otevřená encyklopedie

OGG

Ogg is a data format promoted by the Xiph.org Foundation. It was designed as part of a larger initiative whose aim was to develop components for encoding and decoding multimedia that are free and can be freely re-implemented in software (BSD licence).

The format consists of chunks of data, each called an Ogg page. Each page begins with the OggS string, which identifies the file as an Ogg format. A serial number and a page number in the page header identify each page as part of a series of pages making up a bitstream.
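Reading these fields from the start of a page might look like the sketch below. It assumes the fixed 27-byte little-endian page header layout described in RFC 3533 (capture pattern, version, header type, granule position, serial number, page number, checksum, segment count); the function name and the returned dictionary keys are illustrative.

```python
import struct

# Assumed header layout per RFC 3533: 4s B B q I I I B = 27 bytes, little-endian.
OGG_PAGE_HEADER = struct.Struct("<4sBBqIIIB")


def parse_ogg_page_header(data):
    """Return selected header fields of the Ogg page at the start of data."""
    if len(data) < OGG_PAGE_HEADER.size:
        raise ValueError("not enough data for an Ogg page header")
    (magic, version, header_type, granule_position,
     serial_number, page_number, checksum, segment_count) = \
        OGG_PAGE_HEADER.unpack_from(data)
    if magic != b"OggS":
        raise ValueError("missing OggS string, not an Ogg page")
    return {
        "version": version,
        "serial_number": serial_number,
        "page_number": page_number,
        "segment_count": segment_count,
    }


# Synthetic header for demonstration; a real file would be read from disk.
sample_page = OGG_PAGE_HEADER.pack(b"OggS", 0, 0x02, 0, 0x1234, 0, 0, 1)
header = parse_ogg_page_header(sample_page)
print(header)
```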

The different parts of the project are meant as alternatives to non-free standards such as the MPEG, Real, QuickTime and Windows Media codecs and the RIFF formats WAV and AVI.

source: Wikipedie otevřená encyklopedie

AAC

Advanced Audio Coding (AAC) is an audio format developed as the logical successor to the MP3 format for medium and higher bitrates within the MPEG-4 standard. The AAC format is not unified; it comprises several profiles, extensions, etc. There are also several encoders (mainly proprietary) that differ greatly in quality. In hardware it has been successful mainly in Apple iPods. The newer firmware (2.0) of the PSP (PlayStation Portable) also supports AAC.

source: Wikipedie otevřená encyklopedie

Codec

A codec (a blend of coder/decoder or compressor/decompressor) is a device or a computer program capable of transforming a data stream or a signal. Codecs put data into an encoded form (mainly for transmission, storage or encryption), but nowadays they are more often used for decoding the data back into more or less the original form for viewing or other manipulation. Codecs are a basic part of software for editing multimedia files (music, films) and are often used for videoconferencing and streaming multimedia data.

A decoder alone is also often called a codec, but strictly speaking video players do not use codecs. They lack the first part of the program, the encoder, and only play the video (unlike video editing programs). The same holds vice versa: the program x264 cannot properly be called a codec because it can only compress video and cannot decode it. Properly speaking, x264 is an encoder for the MPEG-4 AVC (H.264) format, while e.g. Media Player Classic includes an integrated decoder for the MPEG-2 format.

The word codec is therefore overused, and it usually means a format, an encoder or a decoder. This practice is common not only in the Czech Republic but also abroad, where the word is very often used in the wrong context. A hardware endec (from encoder/decoder) works on a similar principle.
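The two halves of a codec can be illustrated with a toy example. The sketch below uses simple run-length encoding, which is not what real video codecs do; it is swapped in only because it is short enough to show an encoder and a matching decoder side by side. All names are illustrative.

```python
def rle_encode(data):
    """Encoder half: collapse runs of equal bytes into (count, value) pairs."""
    out = bytearray()
    i = 0
    while i < len(data):
        run = 1
        # Extend the run while the next byte repeats; a count must fit in one byte.
        while i + run < len(data) and data[i + run] == data[i] and run < 255:
            run += 1
        out += bytes([run, data[i]])
        i += run
    return bytes(out)


def rle_decode(encoded):
    """Decoder half: expand (count, value) pairs back into the original bytes."""
    out = bytearray()
    for i in range(0, len(encoded), 2):
        count, value = encoded[i], encoded[i + 1]
        out += bytes([value]) * count
    return bytes(out)


scan_line = b"\x00" * 10 + b"\xff" * 3  # ten black pixels, three white ones
packed = rle_encode(scan_line)
restored = rle_decode(packed)
print(len(scan_line), len(packed), restored == scan_line)
```

A player in the sense of the text would ship only `rle_decode`, and a pure encoder such as x264 corresponds to shipping only `rle_encode`; a codec in the strict sense contains both.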

source: Wikipedie otevřená encyklopedie

Format vs. Codec

Codec and format are often mixed up, which confuses users. Part of the problem is that in some cases the name of a codec is the same as the name of its format; examples of such codecs are Lagarith, HuffYUV and WMV. The distinction matters mainly for codecs whose name differs from the name of the format they produce. This is the case of two well-known codecs, DivX and Xvid, which both work with the same MPEG-4 ASP format. This means that they are compatible (a video encoded by one of them can be decoded by the other). Electronics producers often declare support for DivX. This statement is not accurate: correctly, such a device supports MPEG-4 ASP and is able to play a video created by any codec whose output is MPEG-4 ASP.

Codecs must not be confused with so-called containers either. A container makes it possible to store audio, video and other data in one file, with suffixes such as .ogg, .mpg, .avi or .mov; it only stores the information encoded by codecs. Containers differ in the formats they can contain. AVI and Matroska, for example, are universal containers.
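The DivX and Xvid example can be modelled as a lookup from codec name to the format it produces, with a device declaring the formats (not the codecs) it supports. The table only contains pairs named in this lesson, and the device and function names are hypothetical.

```python
# Codec -> format it produces, limited to examples mentioned in this lesson.
CODEC_FORMAT = {
    "DivX": "MPEG-4 ASP",
    "Xvid": "MPEG-4 ASP",
    "x264": "MPEG-4 AVC (H.264)",
    "HuffYUV": "HuffYUV",
    "Lagarith": "Lagarith",
}


def can_play(device_formats, encoder_name):
    """A device can play a video if it supports the format the encoder produced."""
    produced_format = CODEC_FORMAT.get(encoder_name)
    return produced_format is not None and produced_format in device_formats


# Hypothetical player advertised as "supports DivX".
player_formats = {"MPEG-4 ASP"}

for codec in ("DivX", "Xvid", "x264"):
    print(codec, can_play(player_formats, codec))
```

Because compatibility is decided by the format, the player handles Xvid video just as well as DivX video, while x264 output is rejected even though all three are commonly called "codecs".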

source: Wikipedie otevřená encyklopedie

Most Used Multimedia Containers

A multimedia container is a means of storing various multimedia data (streams) in one file. It is a kind of envelope for digital data that includes information about the stored video/audio format, the compression used, etc. One file can then combine, for example, one video with several sound tracks (in different languages) and several subtitle tracks (in different languages), and the container provides their synchronization. The user can then choose which combination to play. Containers differ in their ability to hold different multimedia data. A container does not determine the inner compression of the stored data; that is determined by the codec used. Some containers can hold only a limited set of formats (e.g. MPEG). A container also includes information about the codec with which each data stream was encoded.

source: Wikipedie otevřená encyklopedie

AVI

AVI (Audio Video Interleave) files have been the standard for digital video since video first appeared on computers. The .avi suffix does not identify the file unambiguously; it only assigns it to the general group of Video for Windows files. An AVI file can be created with various encoding systems (codecs). Several specific compression and decompression schemes are associated with the .avi suffix, and the appropriate one has to be installed on the computer before such a video file can be played.

source: Video na PC

MPEG

MPEG is another frequently used format. As the name suggests, it is intended for MPEG video and sound, and it allows local data storage on a disc, streaming via the Internet, terrestrial or satellite transmission, and also interactive content. The format comes from electronics producers, and the main requirement was simplicity so that it could be implemented easily in commercial products.

source: tvfreak.cz

QUICK TIME

This multimedia container (a competitor to AVI) was developed by Apple and can contain any codec, CBR or VBR. It uses the suffix .QT or .MOV. It can carry various types of information, for example Flash. The basic unit of the file is an atom, which can contain other atoms.
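Walking through atoms can be sketched as below. The sketch assumes the usual atom header of a 32-bit big-endian size followed by a four-character type, with size 1 meaning that a 64-bit size follows and size 0 meaning that the atom runs to the end of the data. The helper names and the sample data are made up for the example.

```python
import struct


def iter_atoms(data, start=0, end=None):
    """Yield (type, payload_offset, payload_size) for each atom in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, kind = struct.unpack_from(">I4s", data, pos)
        header_size = 8
        if size == 1:  # a 64-bit size follows the type field
            if pos + 16 > end:
                break
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header_size = 16
        elif size == 0:  # the atom extends to the end of the enclosing data
            size = end - pos
        if size < header_size or pos + size > end:
            break  # malformed or truncated atom: stop rather than guess
        yield kind.decode("latin-1"), pos + header_size, size - header_size
        pos += size


def make_atom(kind, payload):
    """Build one atom from a 4-byte type and a payload (helper for the demo)."""
    return struct.pack(">I4s", 8 + len(payload), kind) + payload


# Synthetic data: an 'ftyp' atom followed by a 'moov' atom holding an 'mvhd' atom.
sample = make_atom(b"ftyp", b"qt  ") + make_atom(b"moov", make_atom(b"mvhd", bytes(4)))

top_level = list(iter_atoms(sample))
_, moov_offset, moov_size = top_level[1]
children = list(iter_atoms(sample, moov_offset, moov_offset + moov_size))
print([kind for kind, _, _ in top_level], [kind for kind, _, _ in children])
```

Nesting is handled by calling the same function on the payload of a parent atom. Which atom types are containers is not visible from the header alone, so the caller has to know where to descend.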

source 1: tvfreak.cz

source 2: MPlayer: Video formáty

REAL MEDIA FORMAT

Real Media Format (RMF) is a comparatively old container; it uses the suffixes .RM, .RMF or .RV for video and .RA for sound. It was developed by RealNetworks, a company focused mainly on streaming via the Internet, for which the format is intended. An interesting feature is that the frame rate of the video can change over time, and the container format is adapted to this. Objects that the player does not recognize can be skipped. The company's licensing policy does not allow the formats to be used for purposes other than playback, and only with its own player. Unfortunately, this has led to the decreasing popularity of the format. RMF files can be created in many programs.

source: tvfreak.cz

MKV

Matroska is a project that began in May 2003. Its purpose was to create a universal multimedia container that would support as many compression formats and other non-standard features as possible. It can contain nearly any audio/video format and an unlimited number of audio and subtitle tracks. To play it, it is necessary to install a suitable splitter or to use a player that supports Matroska.

source: JeCh Webz

Additional Texts

Questions

- Which types of frame rates are used?

- Why is image interlacing used?

- What is the purpose of the resolution of video?

- Which aspect ratios do you know for digital video?

- What is the bitrate? How can it be divided?

- Which commonly used formats for digital video do you know?

- Which commonly used formats for digital audio do you know?

- What is a codec and what is the difference between a codec and a format?

- What is a multimedia container and how does it differ from a codec or a format?